A Behavioral Economics Perspective

Amazon, Facebook, and Google (AFG) and other social networks are collecting information on us to increase the value of the advertisements and products they sell. Some worry in a vague way about how this is reducing our personal privacy. Others increasingly worry that our personal information is being used to manipulate us and to control the flow of information across society.

In a previous post, I described how behavioral economics (specifically Prospect Theory) applies to products and why Steve Jobs’s fanatical dedication to perfection was so unique and valuable for Apple. The same basic ideas from the behavioral sciences apply to other areas, such as why bonuses are best given a bit at a time and why bad news should be confronted all at once.

Here I consider the behavioral principles outlined in the previous post, but now as applied to the business models of AFG and other social networks. The simple description I outline gives some insight into why these companies are so transformational and, if we are not thoughtful, so potentially insidious.

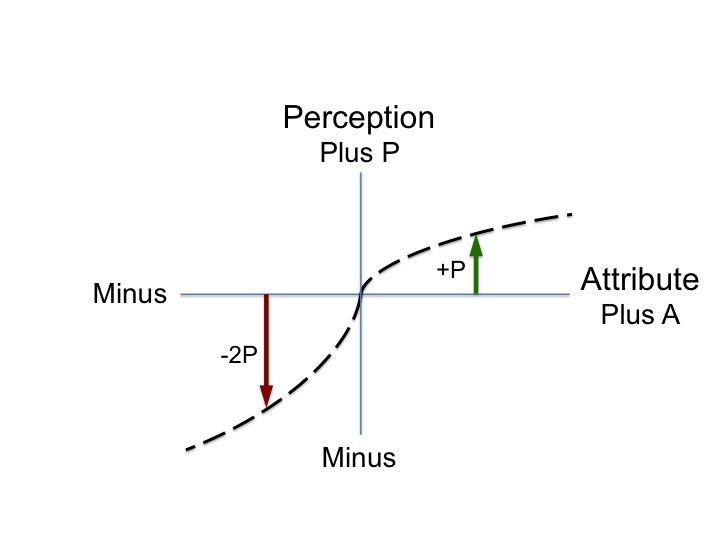

Look first at the figure. Economists use “utility functions” to describe the perceived “goodness” or “badness” of an attribute, such as increasing or decreasing income. The horizontal axis represents an attribute, A, like money, and the vertical axis represents how a person perceives it, P. In general, P is a complex function, but here I use the simplest model: you either like something or you do not.

Changes in perceptual quantities are not linear. To notice an equivalent increase in perceived value, the attribute must increase exponentially, like Moore’s Law. That is, a noticeable change is a constant fraction of the current level. For example, if you turn a light up by 10% you will notice it is brighter. After adapting to the higher light level, the light intensity must be increased by another 10% to create an equivalent perceived change in brightness. These 10% increments in brightness hold roughly true over nine orders of magnitude.
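To make the constant-fraction idea concrete, here is a minimal sketch in Python. The 10% step comes from the light example above; the function names and the starting intensity are my own illustrative choices, not anything measured.

```python
import math

# The 10% step is the figure used in the light example; treat it as illustrative.
NOTICEABLE_FRACTION = 0.10


def intensity_after_steps(start, steps, fraction=NOTICEABLE_FRACTION):
    """Physical intensity after `steps` equally noticeable increases.

    Each step multiplies the current level by (1 + fraction), so the
    physical quantity grows exponentially with the number of steps.
    """
    return start * (1.0 + fraction) ** steps


def noticeable_steps_between(low, high, fraction=NOTICEABLE_FRACTION):
    """Number of equally noticeable steps separating two intensities.

    This is the inverse of intensity_after_steps: perception grows with
    the logarithm of the physical quantity.
    """
    return math.log(high / low) / math.log(1.0 + fraction)


# Equal perceived steps require ever larger physical increases.
for step in range(0, 5):
    print(step, round(intensity_after_steps(100.0, step), 2))
```

Under this model, going from 1 to 10 units takes the same number of noticeable steps as going from 100 to 1,000, which is what it means for perception to track ratios rather than differences.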

This principle is shown by the curves bending over on the positive and negative sides of the chart. The major difference is that, for the same magnitude of A, the perceptual result is slightly more than 2X stronger when A is negative than when A is positive. That is, if I take $10 away from you, it feels a bit more than twice as bad as my giving you $10 feels good.
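A curve with this shape can be sketched in a few lines of Python. This is only an illustration under my own assumptions: I use a logarithmic curve for the bending, a scale of 10 to set how quickly it bends, and a loss multiplier of 2.25, which is a commonly cited estimate consistent with "slightly more than 2X". None of these values is taken from the figure.

```python
import math

LOSS_AVERSION = 2.25  # assumed multiplier for losses ("slightly more than 2X")
SCALE = 10.0          # assumed; controls how quickly the curve bends over


def perceived_value(a, loss_aversion=LOSS_AVERSION, scale=SCALE):
    """Perceived value P of a change A measured from the current reference point.

    Gains follow a curve that flattens as A grows; losses follow the
    mirror-image curve, stretched by the loss-aversion multiplier.
    """
    magnitude = math.log1p(abs(a) / scale)
    if a >= 0:
        return magnitude
    return -loss_aversion * magnitude


gain = perceived_value(10.0)
loss = perceived_value(-10.0)
print("gain of $10:", round(gain, 3))
print("loss of $10:", round(loss, 3))
print("loss / gain magnitude:", round(abs(loss) / gain, 2))
```

The ratio printed on the last line is the loss multiplier itself, because the two sides of the curve differ only by that factor in this simple model.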

Notice one other thing. Because your perceptual system is always adapting, like to the light level in a room, changes tend to start from zero, where you are most sensitive. That is, around zero the slope of the curves is greatest. Thus, the goal is to constantly provide small rewards starting from zero on the positive side and to avoid even small negatives at all costs.
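The effect of resetting to zero can be illustrated with a small Python sketch. It assumes the same kind of curve as in the figure (logarithmic bending, losses weighted about 2.25 times as heavily, both my own illustrative parameters) and assumes the reference point resets fully to zero between events, which is a simplification.

```python
import math


def perceived(a, loss_aversion=2.25, scale=10.0):
    """Perceived value of a change `a` from zero; parameters are assumptions."""
    magnitude = math.log1p(abs(a) / scale)
    return magnitude if a >= 0 else -loss_aversion * magnitude


def slope_from(a, step=1e-6):
    """Forward-difference slope of the curve at `a` (sensitivity to a small gain)."""
    return (perceived(a + step) - perceived(a)) / step


def total_if_split(amount, pieces):
    """Total perceived value when `amount` arrives in equal pieces.

    Assumes full adaptation between pieces, so each piece is judged
    from zero, where the curve is steepest.
    """
    return pieces * perceived(amount / pieces)


print("slope at 0: ", round(slope_from(0.0), 4))
print("slope at 50:", round(slope_from(50.0), 4))
print("gain of 100 at once:     ", round(total_if_split(100.0, 1), 2))
print("gain of 100 in 10 pieces:", round(total_if_split(100.0, 10), 2))
print("loss of 100 at once:     ", round(total_if_split(-100.0, 1), 2))
print("loss of 100 in 10 pieces:", round(total_if_split(-100.0, 10), 2))
```

In this toy model, a gain delivered in many small pieces adds up to more perceived value than the same gain delivered at once, while a loss delivered in pieces hurts more than one lump loss. That is the logic behind many small rewards and behind taking bad news all at once.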

What does this have to do with AFG? We can think of them as AI-driven feedback machines designed to maximize the number of small positive rewards they give to their users. Of course, the more they know about us the better they can feed us content that gives us the desired endorphin rushes. That is, they want to constantly reset us to zero and continuously give us new positive micro-experiences. They want us addicted to their services and they have a way to do it.

The negative side is even more interesting. Here, because negatives are so impactful, they must avoid them. They never want to mistakenly feed us information we don’t like or don’t enjoy. Here again, the knowledge they have about us helps them determine what we see and avoid such “mistakes”.

Traditional media can only ineffectively filter the information their customers see. For example, if you are a fan of the New York Times you will mostly read things that align with your interests. But not always. Some articles are of no interest (a slight perceptual negative because they waste your time) and occasionally you will read something you don’t like or that makes you feel bad. The social positive from traditional media is that society obtains a fuller appreciation for what is going on in the world. But as individuals, we mostly don’t have that need.

AFG are potentially orders of magnitude better at giving us what we want and limiting content we disagree with or that upsets us. The AFG business models are selective filters that become even more effective over time as they continue learning about us. In the limit, we would only see content we want to consume and only ads for products we want to buy. That limit will never be reached, but moving toward it is how AFG maximize their business models.

AFG are machines that initially let us see the entire information landscape. But using them over time is a bit like falling into black holes where less and less gets in beyond our immediate interests. And remember, I am only describing one of many behavioral science concepts that can be used to influence what we see and ultimately how we think. It would be fascinating to learn in detail how these companies are advancing the frontiers of behavioral economics to expand their business models.

Clearly, the world has never before confronted a system that represents the convergence of behavioral science, massive amounts of personal and other data, real-time feedback of all types, and super-human levels of computer intelligence. It is all very impressive, but what will these systems eventually do to us and to society? What do you think?

PS. Amazon and Google especially provide unprecedented value to society. More generally, these companies can identify individuals and uniquely understand what they want, what they can do, and how to motivate them. How can these enormous and improving capabilities be used to address other major problems in society, such as education, healthcare, finding jobs (especially for those with special abilities, which i4j calls cool-abilities), and locating young entrepreneurs (what Gallup is doing) to help grow the economy?